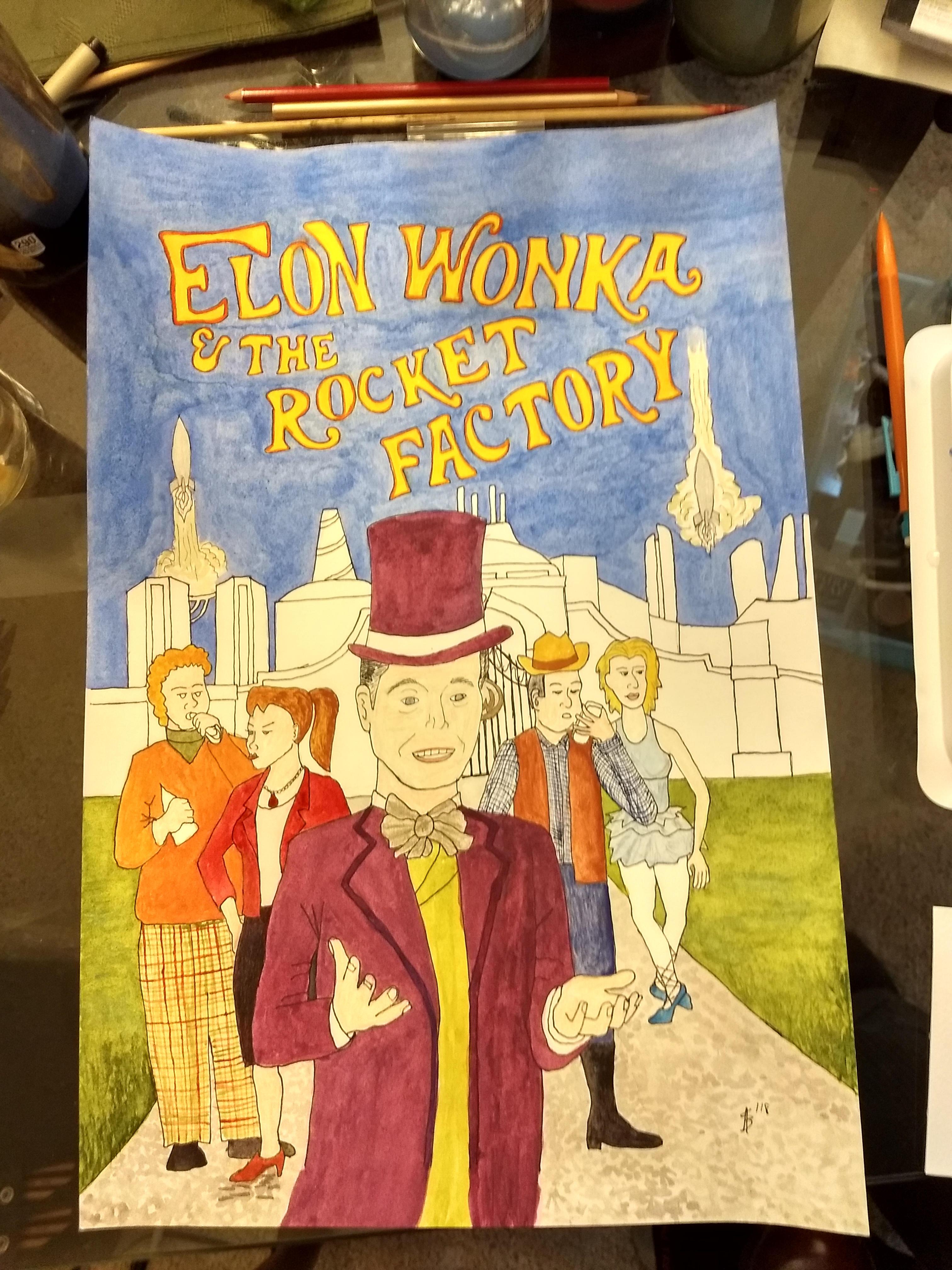

Hi! I am currently vying for two Nobel Prizes, and much like the Emmy, Grammy, Oscar, and Tony awards I have given myself, I have awarded these prehumously. (No one ever uses that, do they? It seems like a natural, logical extension of that other thing they use all the damn time, like being ungrateful louts is codified or something.)

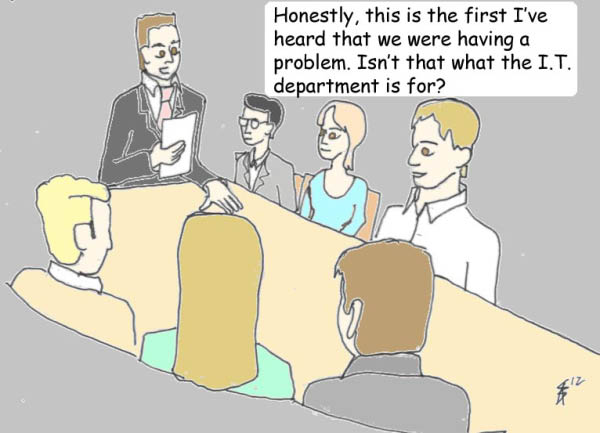

One is for Economics for my Unified Purse Theory. This is a theory brought about by noting the number of business decisions that I see getting made on the rationale “it’s not coming out of MY budget so hey.”

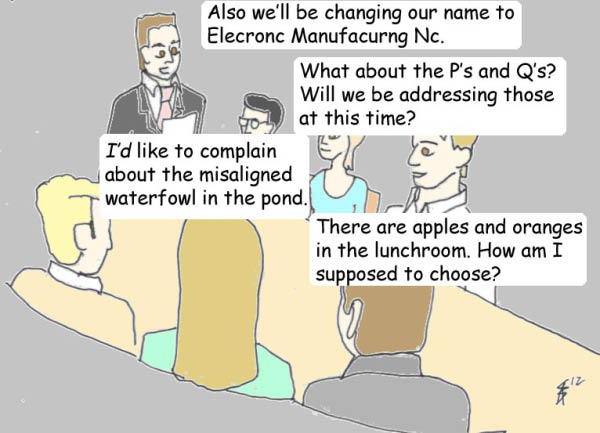

I have invented the observation that “it doesn’t matter whose purse it’s coming out of WE’RE STILL PAYING FOR IT.” Does your plan save us millions in hardware but cost us hundreds of millions in travel? THEN IT’S A HUGE LOSS and also NO.

Divisions in the purses are for blame-gaming later, or micro-adjustments in strategy. People say everything is about the bottom line, but no one is anywhere near really looking at the bottom line; their path is all full of asterisks and footnotes.

I am serious about wanting the Nobel for this. It’s a concept of considerable elegance and should be causing you to wonder if maybe I’m as crazy as John Nash.

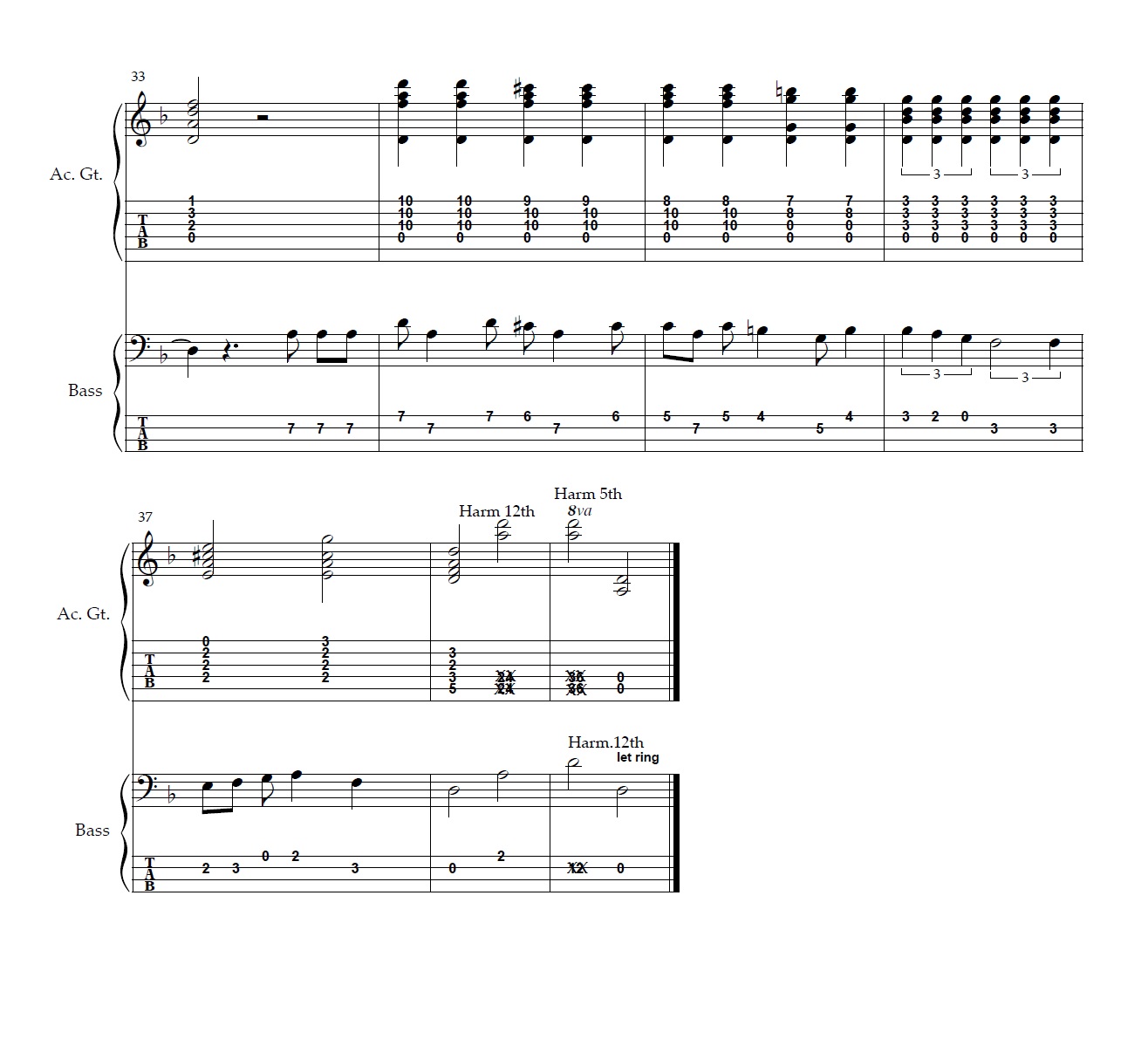

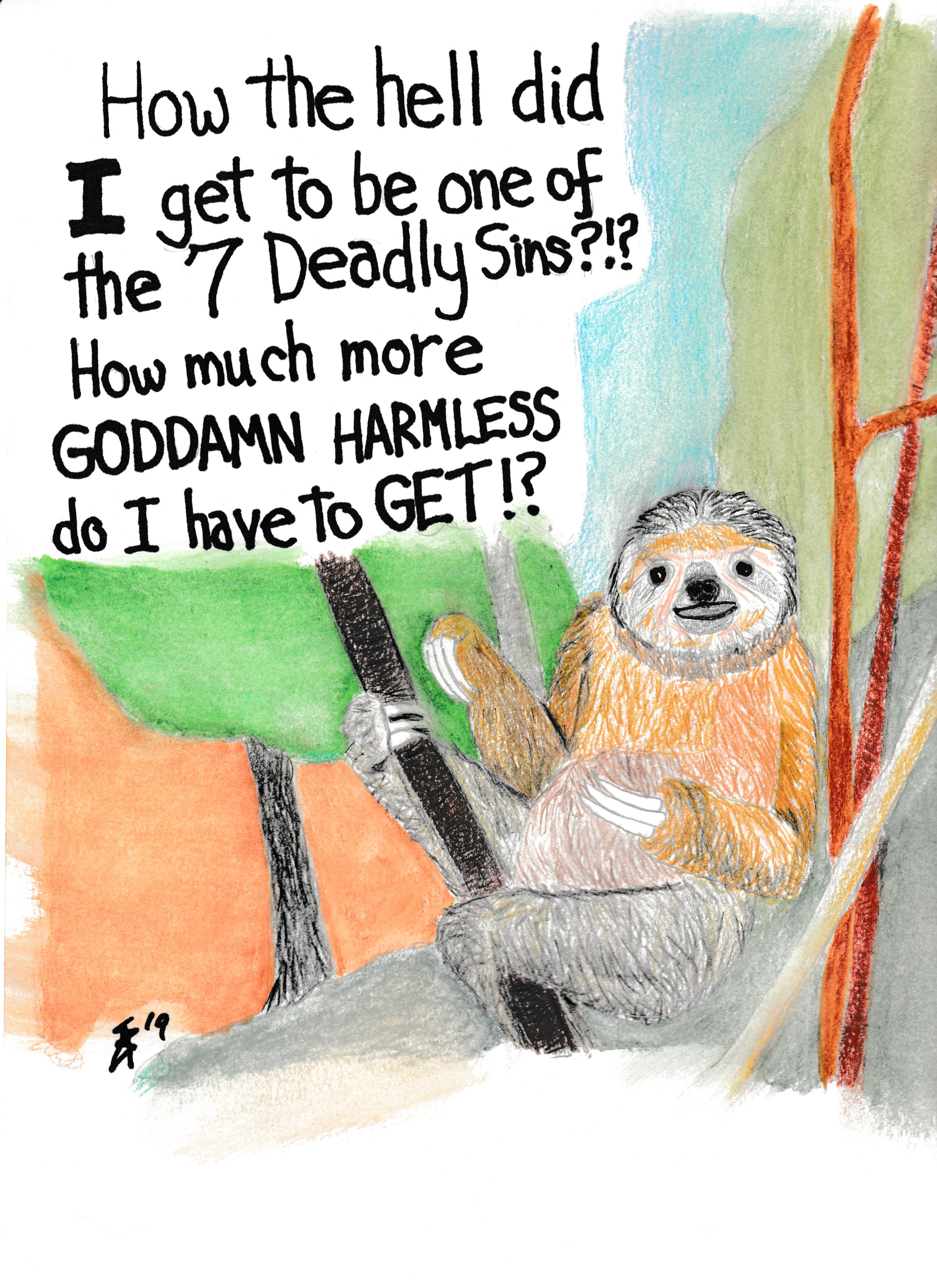

The other is for what I call the Burns Limit. It’s a soft target full of fuzzy logic that sounds like a formula, and the idea is this: There is a number of words past which your sentence has lost the ability to transfer information, and this number will always be less than the number you’d need to fully delineate your meaning and rule out misinterpretation. This happens in science and law all the time; by the time you’ve “partied of the first part” and “subsequent of the second point of contention”ed yourself into precise specifications, you look in the rearview and see Too Long Didn’t Read about three miles back.

In order to be understood, we expect a certain amount of synapse-bridging and assumptions and agreements, and we put things concisely. We try not to exceed the Burns Limit, and if we have to clear up an occasional failure to bridge, well… clearing up isn’t hard unless you’re up against someone who doesn’t WANT to understand.

I don’t really think that idea deserves a Nobel, but I’d submit it in Economics anyway to get them considering me for the first one…